Enterprise Virtualization and Consolidation Using KVM (Kernel-based Virtual Machine)

Architectural Design, Performance Engineering, and High-Availability Strategy

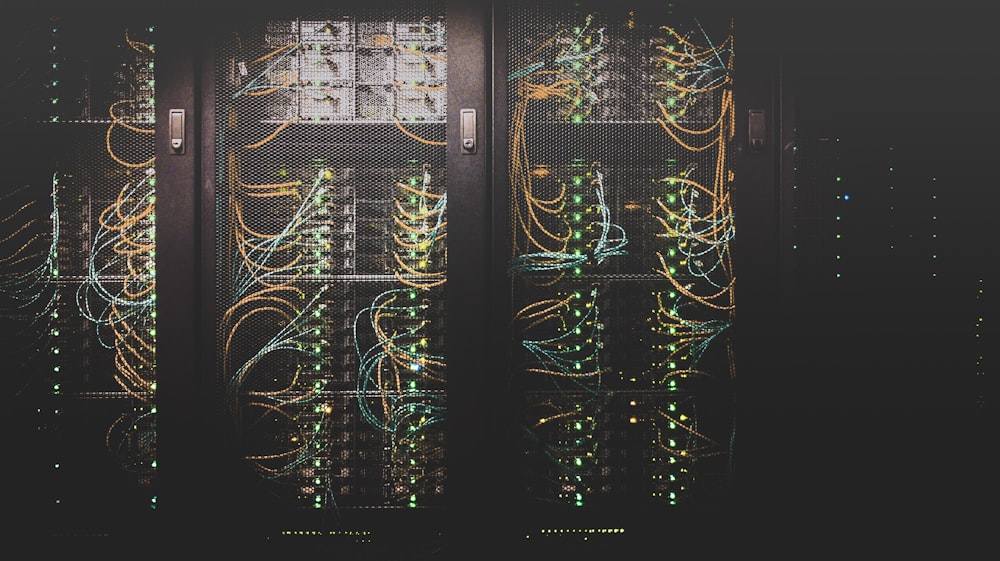

Virtualization in enterprise Linux environments has evolved beyond simple hardware abstraction. Today, it is a foundational architectural layer that underpins workload isolation, high-availability clustering, private cloud orchestration, and infrastructure automation.

Kernel-Based Virtual Machine (KVM) has emerged as a production-grade hypervisor embedded directly within the Linux kernel, enabling Linux to function as a Type-1 hypervisor while preserving the flexibility and control of the host operating system.

KVM Architecture: Kernel-Level Virtualization

KVM converts the Linux kernel into a hypervisor by leveraging hardware virtualization extensions such as:

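- Intel VT-x (CPU virtualization), with VT-d providing IOMMU support for device assignment

- AMD-V (CPU virtualization), with AMD-Vi providing the equivalent IOMMU support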

The hypervisor layer operates through:

- kvm.ko – core virtualization module

- kvm-intel.ko or kvm-amd.ko – CPU-specific modules
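To confirm which vendor module a host should load, you can check the CPU extension flags the kernel exposes. The Python sketch below assumes an x86 host with the standard `/proc/cpuinfo` layout, where Intel VT-x appears as the `vmx` flag and AMD-V as `svm`. The function names are illustrative. A flag alone does not prove the feature is enabled in firmware, so the sketch also checks for the `/dev/kvm` device node.

```python
from pathlib import Path
from typing import Optional


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU flags from the first 'flags' line of /proc/cpuinfo text (x86 layout)."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            return set(value.split())
    return set()


def expected_kvm_module(cpuinfo_text: str) -> Optional[str]:
    """Map the hardware extension flag to the vendor module name as lsmod reports it."""
    flags = cpu_flags(cpuinfo_text)
    if "vmx" in flags:  # Intel VT-x
        return "kvm_intel"
    if "svm" in flags:  # AMD-V
        return "kvm_amd"
    return None  # neither flag found: no hardware-accelerated KVM on this host


def kvm_device_present() -> bool:
    """/dev/kvm is created when the KVM modules load successfully."""
    return Path("/dev/kvm").exists()


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # skip quietly on non-Linux machines
        print("expected module:", expected_kvm_module(cpuinfo.read_text()))
        print("/dev/kvm present:", kvm_device_present())
```

If the expected module is reported but `/dev/kvm` is missing, the usual suspects are disabled firmware settings or a module that failed to load.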

Each virtual machine runs as a standard Linux process (a QEMU process in which each vCPU is a thread), scheduled by the host kernel like any other workload. This design allows KVM to inherit:

- Linux memory management

- Process scheduling

- NUMA awareness

- cgroups resource control

- SELinux security enforcement

User-space interaction is handled via QEMU, which provides device emulation, storage control, and VM lifecycle management. When combined with paravirtualized virtio drivers in the guest, I/O throughput approaches native performance.
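In libvirt-managed deployments, virtio is selected per device in the domain XML. The sketch below uses Python's standard `xml.etree.ElementTree` to build a virtio disk (`bus='virtio'`) and a virtio NIC (`<model type='virtio'/>`). The image path, the qcow2 format, and the bridge name `br0` are hypothetical placeholders. The guest also needs virtio drivers installed.

```python
import xml.etree.ElementTree as ET


def virtio_disk(image_path: str, target: str = "vda") -> ET.Element:
    """A file-backed disk exposed to the guest on the paravirtualized virtio bus."""
    disk = ET.Element("disk", type="file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="qcow2")  # assumed image format
    ET.SubElement(disk, "source", file=image_path)
    ET.SubElement(disk, "target", dev=target, bus="virtio")  # virtio-blk instead of emulated IDE/SATA
    return disk


def virtio_nic(bridge: str) -> ET.Element:
    """A bridged NIC using the virtio-net model instead of an emulated e1000/rtl8139."""
    nic = ET.Element("interface", type="bridge")
    ET.SubElement(nic, "source", bridge=bridge)
    ET.SubElement(nic, "model", type="virtio")
    return nic


if __name__ == "__main__":
    # Hypothetical path and bridge name, for illustration only.
    print(ET.tostring(virtio_disk("/var/lib/libvirt/images/db01.qcow2"), encoding="unicode"))
    print(ET.tostring(virtio_nic("br0"), encoding="unicode"))
```

These fragments belong inside the `<devices>` element of a domain definition.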

Enterprise Consolidation Framework

Server consolidation using KVM is not a lift-and-shift exercise. It requires architectural modeling and performance validation.

A structured consolidation methodology typically includes:

1. Baseline Performance Profiling

- CPU utilization trends

- I/O latency benchmarks

- Memory footprint analysis

- NUMA topology evaluation

- Network throughput measurement

Without an accurate baseline, consolidation risks co-locating workloads whose peaks overlap, which creates resource contention.
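CPU utilization trends are typically built from repeated samples of the aggregate `cpu` line in `/proc/stat`. The sketch below computes utilization between two samples. It assumes the usual field order (user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice) and counts iowait as idle. The function names are illustrative.

```python
def parse_cpu_line(line: str) -> tuple[int, int]:
    """Return (idle_ticks, total_ticks) from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    # user nice system idle iowait irq softirq steal; guest/guest_nice are already
    # included in user/nice, so they are excluded to avoid double counting.
    values = [int(v) for v in fields[1:9]]
    idle = values[3] + values[4]  # idle + iowait
    return idle, sum(values)


def cpu_utilization(before: str, after: str) -> float:
    """Percentage of non-idle CPU time between two /proc/stat samples."""
    idle0, total0 = parse_cpu_line(before)
    idle1, total1 = parse_cpu_line(after)
    elapsed = total1 - total0
    if elapsed <= 0:
        return 0.0
    return 100.0 * (1.0 - (idle1 - idle0) / elapsed)


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the first line of /proc/stat, which is the aggregate 'cpu' line."""
    with open(path) as f:
        return f.readline()
```

A baseline would call `read_cpu_line()` at a fixed interval over days or weeks and keep peaks and percentiles, not a single delta. The `steal` field deserves its own series on hosts that are already virtualized.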

2. Resource Allocation Strategy

Enterprise-grade KVM deployments rely on disciplined resource partitioning:

- vCPU pinning for performance-sensitive workloads

- HugePages allocation for memory-intensive VMs

- NUMA-aligned VM placement

- CPU overcommit ratio modeling

- I/O scheduler tuning

Improper allocation may result in unpredictable latency and degraded workload performance.
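Several of these allocation decisions map directly onto libvirt domain XML. The sketch below computes an overcommit ratio and builds `<cputune>`/`<vcpupin>` for 1:1 pinning. It also builds `<numatune>` to keep guest memory on the same NUMA node and `<memoryBacking><hugepages/>` for hugepage-backed RAM, and it sizes the host hugepage reservation. The caller is assumed to pass host CPUs that all belong to the given NUMA node. The 2 MiB default page size is an assumption.

```python
import xml.etree.ElementTree as ET


def overcommit_ratio(vcpus_per_vm: list[int], host_threads: int) -> float:
    """Total allocated vCPUs divided by host hardware threads."""
    return sum(vcpus_per_vm) / host_threads


def pinned_domain_fragments(host_cpus: list[int], numa_node: int) -> list[ET.Element]:
    """Build <cputune>, <numatune> and <memoryBacking> for a 1:1 pinned guest.

    Assumes every CPU in host_cpus belongs to numa_node.
    """
    cputune = ET.Element("cputune")
    for vcpu, pcpu in enumerate(host_cpus):  # vCPU i runs only on host_cpus[i]
        ET.SubElement(cputune, "vcpupin", vcpu=str(vcpu), cpuset=str(pcpu))

    numatune = ET.Element("numatune")
    # strict: allocate guest memory only from the node that hosts the pinned CPUs
    ET.SubElement(numatune, "memory", mode="strict", nodeset=str(numa_node))

    memory_backing = ET.Element("memoryBacking")
    ET.SubElement(memory_backing, "hugepages")  # back guest RAM with reserved hugepages
    return [cputune, numatune, memory_backing]


def hugepages_needed(guest_mib: int, page_kib: int = 2048) -> int:
    """Hugepages to reserve on the host for one guest, rounded up."""
    return -(-(guest_mib * 1024) // page_kib)


if __name__ == "__main__":
    print("overcommit:", overcommit_ratio([4, 4, 8], host_threads=8))  # 2.0
    for frag in pinned_domain_fragments([2, 3, 4, 5], numa_node=0):
        print(ET.tostring(frag, encoding="unicode"))
    print("2 MiB pages for a 16 GiB guest:", hugepages_needed(16384))  # 8192
```

Pinned, latency-sensitive guests are usually kept out of any overcommitted pool. The ratio matters mainly for the shared pool.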

Storage Architecture Considerations

Storage design is often the most critical factor in virtualization performance. Enterprise KVM environments may integrate:

- SAN-backed block storage

- iSCSI targets

- NFS-backed VM images

- Ceph distributed storage

- LVM thin provisioning

Key considerations include:

- Write amplification

- IOPS saturation thresholds

- Latency under concurrent VM workloads

- Cache tuning (write-back vs write-through)

- Storage redundancy and replication

Performance degradation in virtualized environments is frequently storage-bound rather than CPU-bound.
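Saturation thresholds are easier to reason about once front-end guest I/O is converted into back-end disk operations. The sketch below treats the RAID write penalty as the write-amplification factor and applies one read/write mix to all VMs. That is a simplified model: the penalty table uses commonly cited textbook values and ignores controller write-back cache and full-stripe writes. The 70% utilization threshold is a hypothetical planning value.

```python
# Commonly cited back-end I/Os per front-end write (simplifying assumption).
RAID_WRITE_PENALTY = {"raid0": 1, "raid1": 2, "raid10": 2, "raid5": 4, "raid6": 6}


def backend_iops(frontend_iops: float, write_fraction: float, raid_level: str) -> float:
    """Back-end IOPS generated by a front-end load with the given write fraction."""
    reads = frontend_iops * (1.0 - write_fraction)
    writes = frontend_iops * write_fraction
    return reads + writes * RAID_WRITE_PENALTY[raid_level]


def within_threshold(vm_iops: list[float], write_fraction: float, raid_level: str,
                     backend_capacity: float, threshold: float = 0.7) -> bool:
    """True if aggregate back-end demand stays under threshold x rated back-end IOPS."""
    demand = backend_iops(sum(vm_iops), write_fraction, raid_level)
    return demand <= backend_capacity * threshold


if __name__ == "__main__":
    vms = [400, 300, 300]  # hypothetical per-VM front-end IOPS from the baseline
    for level in ("raid10", "raid5"):
        print(level, backend_iops(sum(vms), 0.3, level),
              within_threshold(vms, 0.3, level, backend_capacity=2000))
```

With the same 1,000 front-end IOPS at 30% writes, RAID 10 needs about 1,300 back-end IOPS and RAID 5 about 1,900. This is why write-heavy consolidation targets can saturate storage long before CPU.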

Networking Design in Virtualized Clusters

Enterprise KVM networking is typically built using:

- Linux bridges

- Open vSwitch

- VLAN segmentation

- Bonded NICs for redundancy

- SR-IOV for performance-sensitive workloads

Network isolation must be carefully engineered to ensure tenant separation, compliance alignment, and failover continuity.
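As one concrete case of VLAN segmentation on Open vSwitch, libvirt can attach a guest NIC to an OVS bridge and tag it with a VLAN in the domain XML. The sketch below builds that `<interface>` element. The bridge name `ovsbr0` and VLAN 120 are hypothetical, and the bridge is assumed to already exist in OVS.

```python
import xml.etree.ElementTree as ET


def ovs_vlan_interface(bridge: str, vlan_id: int) -> ET.Element:
    """Build a libvirt <interface> attached to an Open vSwitch bridge on one VLAN."""
    if not 1 <= vlan_id <= 4094:  # 0 and 4095 are reserved by 802.1Q
        raise ValueError(f"invalid VLAN ID: {vlan_id}")
    iface = ET.Element("interface", type="bridge")
    ET.SubElement(iface, "source", bridge=bridge)
    ET.SubElement(iface, "virtualport", type="openvswitch")  # attach via OVS, not a Linux bridge
    vlan = ET.SubElement(iface, "vlan")
    ET.SubElement(vlan, "tag", id=str(vlan_id))  # guest traffic is placed on this VLAN
    ET.SubElement(iface, "model", type="virtio")
    return iface


if __name__ == "__main__":
    print(ET.tostring(ovs_vlan_interface("ovsbr0", 120), encoding="unicode"))
```

Because the tag is applied on the host side, the guest cannot move itself onto another tenant's VLAN by reconfiguring its own interface.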

High Availability and Cluster Design

Production-grade virtualization requires host-level redundancy and automated failover. High-availability frameworks may incorporate:

- Pacemaker/Corosync clustering

- Shared storage with fencing mechanisms

- Live migration using virsh migrate

- HA load distribution policies

- Redundant power and network paths

Proper quorum configuration and fencing strategy are essential to prevent split-brain conditions in clustered environments.
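Quorum is a strict majority of expected votes. The arithmetic below shows why odd node counts are preferred and why a two-node cluster cannot lose a node under plain majority rules. The sketch assumes one vote per node and no quorum device, and the function names are illustrative.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Strict majority of expected votes (one vote per node assumed)."""
    return expected_votes // 2 + 1


def has_quorum(votes_present: int, expected_votes: int) -> bool:
    """True if the partition holding votes_present votes may keep running resources."""
    return votes_present >= quorum_threshold(expected_votes)


if __name__ == "__main__":
    for nodes in (2, 3, 4, 5):
        need = quorum_threshold(nodes)
        print(f"{nodes} nodes: need {need} votes, tolerate {nodes - need} node failure(s)")
```

A 4-node cluster split 2/2 leaves neither side quorate, and a 2-node cluster has no failure tolerance at all. Corosync's votequorum provides a `two_node` option for that case, which makes correct fencing even more critical.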

Performance Engineering in KVM Environments

Achieving near-native performance requires kernel-level tuning and validation. Key optimization strategies include:

- Enabling virtio-net and virtio-blk drivers

- CPU isolation using isolcpus

- Tuning kernel scheduler parameters

- Disabling unnecessary host services

- IRQ balancing optimization

- Transparent HugePages configuration
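Several of these settings are applied as kernel boot parameters. The sketch below assembles `isolcpus`, `transparent_hugepage`, and 1 GiB hugepage reservations into a command line, and compresses CPU IDs into the kernel's cpu-list syntax. The chosen values (isolated CPUs 2 to 5, THP set to `never`, 16 pages) are hypothetical examples, not recommendations for every workload.

```python
def cpulist(cpus: list[int]) -> str:
    """Compress CPU ids into kernel cpu-list syntax, e.g. [2, 3, 4, 7] -> '2-4,7'."""
    ids = sorted(set(cpus))
    if not ids:
        raise ValueError("at least one CPU is required")
    ranges = []
    start = prev = ids[0]
    for cpu in ids[1:]:
        if cpu == prev + 1:  # extend the current contiguous run
            prev = cpu
            continue
        ranges.append((start, prev))
        start = prev = cpu
    ranges.append((start, prev))
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


def tuning_cmdline(isolated: list[int], thp: str = "never", hugepages_1g: int = 0) -> str:
    """Assemble kernel boot parameters for a latency-focused KVM host (illustrative values)."""
    if thp not in ("always", "madvise", "never"):
        raise ValueError("unsupported transparent_hugepage mode")
    parts = [f"isolcpus={cpulist(isolated)}", f"transparent_hugepage={thp}"]
    if hugepages_1g:
        # reserve 1 GiB pages at boot, before memory becomes fragmented
        parts += ["default_hugepagesz=1G", "hugepagesz=1G", f"hugepages={hugepages_1g}"]
    return " ".join(parts)


if __name__ == "__main__":
    print(tuning_cmdline([2, 3, 4, 5], hugepages_1g=16))
```

Isolated CPUs are then used as the `cpuset` targets for pinned vCPUs. Host housekeeping and IRQs stay on the remaining cores.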

Workloads such as databases and real-time applications demand deterministic latency, which must be validated under sustained load conditions.
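Deterministic latency is usually validated against a tail percentile, not an average. The sketch below uses the nearest-rank percentile method and checks p99 latency samples against a budget. The latency samples would come from a load test run on the guest, and the 10 ms budget in the usage note is a hypothetical target.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_latency_budget(samples_ms: list[float], p99_budget_ms: float) -> bool:
    """True if the p99 of the observed latencies fits within the budget."""
    return percentile(samples_ms, 99) <= p99_budget_ms
```

For example, 98 samples at 1 ms plus outliers of 20 ms and 30 ms give a p99 of 20 ms, which fails a 10 ms budget even though the mean is about 1.5 ms.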

Security Isolation and Governance

Enterprise KVM deployments require layered security controls:

- SELinux enforcement

- sVirt isolation

- Role-based access via libvirt

- Secure API access

- Network segmentation

Guest isolation must be architected to mitigate lateral movement risk within virtual clusters.
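sVirt isolates guests by giving each QEMU process, and the image files it uses, a unique SELinux MCS category pair. A compromised guest process therefore cannot open another guest's disks. The sketch below only illustrates the allocation idea and is not libvirt's implementation. The `svirt_t` label format and the c0 to c1023 category range are assumptions based on common targeted-policy defaults.

```python
import random

MAX_CATEGORY = 1023  # assumption: categories c0..c1023


def allocate_svirt_label(in_use: set[tuple[int, int]], rng: random.Random) -> str:
    """Pick an unused pair of distinct MCS categories and return an svirt_t process label."""
    total_pairs = (MAX_CATEGORY + 1) * MAX_CATEGORY // 2
    if len(in_use) >= total_pairs:
        raise RuntimeError("MCS category space exhausted")
    while True:
        a, b = sorted(rng.sample(range(MAX_CATEGORY + 1), 2))  # two distinct categories
        if (a, b) not in in_use:  # no two running guests may share a pair
            in_use.add((a, b))
            return f"system_u:system_r:svirt_t:s0:c{a},c{b}"
```

The matching image files would carry the same category pair under an image type, so SELinux denies access whenever the pairs differ.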

Disaster Recovery and Replication Strategy

Virtualization simplifies recovery modeling but does not eliminate the need for structured DR planning. Common enterprise strategies include:

- VM snapshot scheduling

- Block-level replication

- Asynchronous replication across sites

- Cold and warm standby clusters

- Automated failover testing

Recovery Time Objective (RTO) and Recovery Point Objective (RPO) must be defined and validated through simulation exercises.
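For periodic snapshot or asynchronous replication, worst-case data loss is roughly one replication interval plus the time for that cycle to land at the DR site. The sketch below compares this estimate and a drill-measured recovery time against RPO and RTO targets. It is a simplified model. The field names and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProtectionPolicy:
    name: str
    replication_interval_min: float  # how often changes are shipped (or snapshots taken)
    max_transfer_lag_min: float      # worst observed time for one cycle to land at the DR site
    drill_recovery_min: float        # measured time to restore service in the last failover test


def worst_case_rpo_min(p: ProtectionPolicy) -> float:
    # A failure just before a cycle lands loses one full interval plus its transfer lag.
    return p.replication_interval_min + p.max_transfer_lag_min


def evaluate(p: ProtectionPolicy, rpo_target_min: float, rto_target_min: float) -> dict[str, bool]:
    return {
        "rpo_ok": worst_case_rpo_min(p) <= rpo_target_min,
        "rto_ok": p.drill_recovery_min <= rto_target_min,
    }


if __name__ == "__main__":
    erp = ProtectionPolicy("erp-db", replication_interval_min=15,
                           max_transfer_lag_min=5, drill_recovery_min=40)
    print(erp.name, evaluate(erp, rpo_target_min=15, rto_target_min=60))
```

A 15-minute replication schedule does not meet a 15-minute RPO once transfer lag is included. The RTO check is only as trustworthy as the last failover drill that produced `drill_recovery_min`.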

KVM in Modern Enterprise Infrastructure

KVM serves as a foundational layer for:

- OpenStack-based private cloud

- Hybrid cloud integrations

- Kubernetes host virtualization

- Infrastructure-as-Code workflows

- DevOps pipeline environments

When integrated with orchestration frameworks, KVM enables scalable, API-driven infrastructure provisioning.

Strategic Perspective

Enterprise virtualization using KVM must be treated as a controlled infrastructure discipline, not a simple hardware consolidation mechanism. Architectural misalignment, improper resource modeling, and lack of governance can introduce systemic instability across production workloads.

Conversely, a structured KVM deployment provides:

- Efficient hardware utilization

- Predictable workload performance

- Controlled multi-tenant isolation

- High-availability failover

- Reduced operational complexity

As infrastructure density increases, architectural precision becomes non-negotiable.